Our founder needed something straightforward: A/B test a few AI models, compare results, pick the winner. Should’ve taken an afternoon. It took weeks.

The tooling landscape was a disaster. Notebooks that couldn’t talk to production. Model APIs with different authentication schemes. Retrieval systems that required their own infrastructure. Prompts versioned in spreadsheets.

Every “simple” experiment meant wiring together five different services, debugging authentication flows, and praying nothing broke overnight.

Open source wasn’t the answer either. Tools looked promising until production. Then you’re maintaining infrastructure that isn’t your core business and patching security vulnerabilities in libraries you barely understand.

Secrets management was a nightmare. API keys scattered across .env files, config repos, developer laptops, and Slack DMs. Zero visibility into who was using what until someone got an eye-watering invoice.

The ecosystem never stops moving. New models drop monthly. Frameworks get deprecated. Best practices change quarterly. Dev tooling matters right up until it competes with shipping features.

More problems we kept hitting:

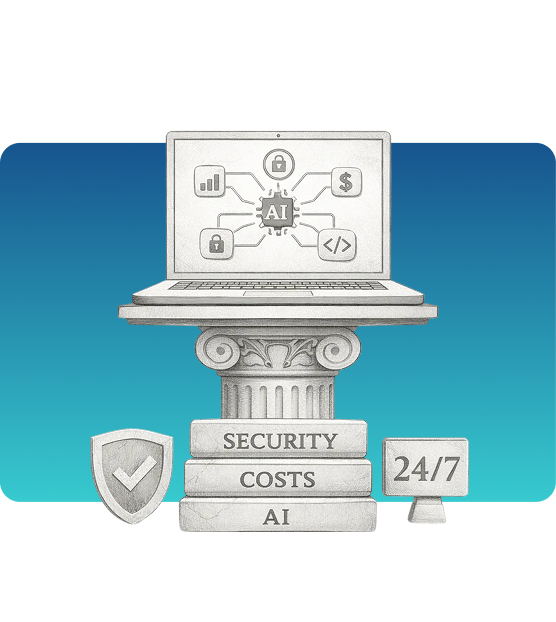

Costs were brutal and opaque

GPU time, API calls, storage, embeddings — pricing designed by people who hate clarity. "Why did our bill triple?" Good luck tracing that.

Security and compliance had no answers

What data are we sending to these models? Do we have audit logs? Can we prove we're not leaking PII? Usually "we're not sure."

The paradox of choice

LangChain or LlamaIndex? Which embedding model? Fine-tune or RAG? Teams spend weeks evaluating tools instead of building.

Knowing where AI actually helps

Not every task needs a language model. Without a way to quickly prototype, teams over-engineer simple problems or miss real opportunities.

So we did what engineers do. We built scripts. Then more scripts. Then a framework to manage the scripts. Then we realized we’d accidentally built a platform.

We wanted a happy path. A way for teams to just go build with AI — without the months of infrastructure work, without the security theater, without the tool evaluation paralysis.

We kept the bar high. Enterprise security from day one. Centralized secrets management. Cost visibility baked in. Clean architecture. The kind of engineering discipline you need when things have to work at 3am.

Need to move fast? Our hosted platform gets you building in minutes.

Need to keep data on-prem? Deploy the same platform in your own environment.

Data residency requirements? We deploy in any region.

Can’t send data outside your walls? Airgapped deployments with self-hosted models.

Desktop tools are free. Same experience offline and online. No bait-and-switch.

Pay for what you need, when you need it. No massive upfront contracts.

We built it for data scientists frustrated by deployment complexity, ML engineers drowning in infrastructure, and security teams blocking AI projects because governance was an afterthought.

Notebooks for data exploration. Multi-model orchestration to compare providers. RAG pipelines that scale. An agent framework for autonomous workflows. Model hosting for teams who don’t want to manage GPUs.

But notebooks weren’t enough. Production code needed version control, debugging, and proper tooling. So we built an IDE. Now teams move from experiment to production without switching platforms.

As our agent capabilities grew, we needed a way to manage them — monitor behavior, enforce policies, track what they were doing and why. So we built an internal governance layer.

Customers saw it. They wanted it. Not just for Calliope agents — for all their agents, regardless of where they were built.

That internal tool is becoming Zentinelle: a standalone platform for AI governance, agent management, and policy enforcement across any AI system. Coming soon.

In Greek mythology, Calliope was the Muse of epic poetry — the divine inspiration behind stories that moved civilizations. She didn't write the epics herself. She helped creators bring their visions to life. That's what we're building. Not AI that replaces you — AI infrastructure that amplifies what you can create.